Day 20 — Make Javascript run faster with this simple trick!

06 September 2020 · recurse-center

Today I paired with Ezzeri to look at some of his Exercism Rust track solutions. The discussion moved to WebAssembly and we went through some minimal examples he has been working on. He's also written a nice blog post which has some beginner level WebAssembly resources.

The whole exercise filled me with the same level of WebAssembly excitement that I had after watching talks by Russell Keith-Magee and David Beazley last year. (I got the chance to see them live at PyCon Australia and India!) Today I ended up watching the talks again. What follows is a lossy captioning of a subset of Russell's talk.

The runtime performance of Javascript engines has received a lot of attention because Javascript is so important to the end-user experience in the browser.

He shows this simple example:

function add(first, second) {
  return first + second;
}

for (let i = 0; i < 1000; i++) {
  add(37, 42);
}

Once the code is executing, the Javascript interpreter can identify its pattern of execution. It can see that the `add` function is only ever invoked with integers, and can therefore produce an optimized version of `add` that only does integer math (falling back to the general version if that assumption ever breaks). Because of the narrowed scope, the optimized version is faster: it is close to the bare-metal machine language instructions that the computer actually needs to execute.

This is the same thing a compiler does in a compiled language, but it is happening at runtime. That's why this process is called just-in-time compilation: the conversion to machine language happens "just in time" for the code to execute, in contrast to the ahead-of-time compilation of a compiled language. A just-in-time, or JITing, interpreter optimizes code at runtime to make it run faster.

The question then becomes: can we game the system? Can we do something to our code that will make it easier for the interpreter to identify possible optimizations?

Yes! For example, in Javascript the result of a bitwise OR (`|`) on numbers is always a 32-bit signed integer. If we OR an integer with `0`, we get the original integer back, as long as it fits in 32 bits. If we OR both of our function's arguments (and the result) with `0`, the interpreter can know that the addition must be an integer operation.
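To see what `| 0` actually does to a value, here is a small sketch you can paste into a browser console or Node. The helper name `toInt32` is my own; it is named after the ToInt32 conversion that the language applies to both operands of `|`.

```javascript
// `x | 0` runs x through ToInt32: strings are converted to numbers,
// fractions are truncated toward zero, NaN becomes 0, and values outside
// the 32-bit signed range wrap around modulo 2 ** 32.
function toInt32(x) {
  return x | 0;
}

const samples = [42, -7, 3.9, -3.9, "12", NaN, 2 ** 31, 2 ** 32 + 5];

for (const value of samples) {
  console.log(`${String(value)} | 0 -> ${toInt32(value)}`);
}
```

Integers that fit in 32 bits come back unchanged, while `3.9` becomes `3`, `2 ** 31` wraps to `-2147483648`, and `2 ** 32 + 5` wraps to `5`. That loss of information is exactly what lets the engine treat the value as a plain machine integer.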

function add(first, second) {
  first = first|0;
  second = second|0;
  return (first + second)|0;
}

for (let i = 0; i < 1000; i++) {
  add(37, 42);
}

This has a direct translation to machine language, and so it runs faster. That's a neat trick, but why do we care? And since we have to deface our code pretty badly to get that optimization, is it really worth it? If you're looking at this as a way to speed up your handwritten Javascript, then probably not.
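The annotations don't just hint at types, they change what the function computes, which is why an engine can safely map the annotated version onto a 32-bit machine add. Here is a sketch comparing the two versions; `addPlain` and `addInt32` are names I made up for the comparison.

```javascript
// Plain version: `+` on Javascript numbers is 64-bit floating-point addition.
function addPlain(first, second) {
  return first + second;
}

// Annotated version (the |0 trick from the talk): inputs and result are
// forced into 32-bit signed integers, so the sum wraps on overflow just
// like a CPU's 32-bit integer add instruction.
function addInt32(first, second) {
  first = first | 0;
  second = second | 0;
  return (first + second) | 0;
}

console.log(addPlain(37, 42), addInt32(37, 42));               // 79 79
console.log(addPlain(2147483647, 1), addInt32(2147483647, 1)); // 2147483648 -2147483648
console.log(addPlain(1.5, 2.5), addInt32(1.5, 2.5));           // 4 3
```

For small integer inputs like the ones in the loop above the two versions agree, so the trick is only "free" for code that really does integer math.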

But think about what we've just been able to do. By doing nothing more than "annotating" our Javascript code, we have been able to convince the Javascript interpreter to give us near direct access to optimized CPU-level integer arithmetic. It's a very roundabout way of expressing it, and the optimization doesn't actually happen till the code is running, but we have used an interpreted language to expose a primitive machine language construct! If we can expose one machine language construct, can we expose all capabilities of a machine language?

A few years ago, a team at Mozilla looked at the Javascript language as a whole to determine if this could be done. What they came up with is called asm.js. It is a subset of the full Javascript specification, and the code is covered with annotations and weird coding conventions like the "bitwise OR with `0`" from above. Used together, those annotations and conventions provide a set of lower-level primitives for performing integer and floating-point arithmetic, allocating memory, defining and invoking functions, and so on. They effectively defined a way to expose the machine language capabilities of a CPU using nothing but a platform-independent interpreted language (Javascript), making it a kind of cross-CPU assembly language.

But even asm.js can be improved upon, because it is still just Javascript code. It needs to be transmitted in text format, then parsed, then interpreted, then executed, and then JITed. If we know ahead of time that our code is compatible with that fast Javascript subset, can we send it to the browser in a ready-to-use format? That's what WebAssembly (WASM) solves.

WASM is a binary format that formalizes the asm.js language subset, so it can be delivered to the browser in a pre-parsed, "pre-hinted for JITing purposes" form that is smaller to transmit than asm.js code. It is faster to start because the browser doesn't have to parse Javascript source, and it's more consistent in operation because you're telling the JITing interpreter exactly what operations to execute and how to optimize them. To use a WASM binary in the browser, you do still need to write some Javascript:

// `output` is assumed to be an element on the page, e.g. <p id="output"></p>.
fetch("./add.wasm")
  .then((response) => response.arrayBuffer())
  .then((bytes) => WebAssembly.instantiate(bytes))
  .then((mod) => {
    output.textContent = mod.instance.exports.add(1, 2);
  })
  .catch(console.error);

This code will run on any browser that has been released in the last 2 years. And just as very few people write assembly code anymore, very few people need to write WASM. A much easier approach is to use a compiler for a language that can target WASM. For example, Rust.
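Out of curiosity, here is roughly what that binary looks like for a two-argument `add` if you write it by hand instead of using a compiler. This is a sketch I assembled from the WebAssembly binary format (magic number, version, then the type, function, export and code sections). It uses the synchronous `WebAssembly.Module` and `WebAssembly.Instance` constructors so it runs as-is in Node or a browser console, whereas the talk's snippet uses the asynchronous `WebAssembly.instantiate`.

```javascript
// A hand-assembled WASM module exporting add(i32, i32) -> i32.
const bytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, // magic number: "\0asm"
  0x01, 0x00, 0x00, 0x00, // binary format version 1
  // Type section (id 1): one function type, (i32, i32) -> i32
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f,
  // Function section (id 3): one function, using type 0
  0x03, 0x02, 0x01, 0x00,
  // Export section (id 7): export function 0 under the name "add"
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00,
  // Code section (id 10): local.get 0, local.get 1, i32.add, end
  0x0a, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b,
]);

// Compile and instantiate synchronously (fine for a module this small).
const wasmModule = new WebAssembly.Module(bytes);
const wasmInstance = new WebAssembly.Instance(wasmModule);

console.log(wasmInstance.exports.add(1, 2));          // 3
console.log(wasmInstance.exports.add(2147483647, 1)); // -2147483648 (i32 wraps)
```

The `i32.add` instruction gives the same wrap-around you get from `(first + second)|0` in the annotated Javascript version; WASM just states the types up front instead of hinting at them.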

Later in the talk he also mentions that:

One of the major reasons that people write C modules for Python is performance. They rewrite a hot loop in their Python code using C to make it run faster. But using a C module means your Python code can no longer work cross-platform, at least not without recompilation[1]. But if you compile your C module into WASM, you get a cross-platform binary. All you need then is the ability to invoke that cross-platform binary from your Python code, and that's where wasmer can help you.

[1] From what I understand, you need to build Python wheels for a combination of different platforms (Windows, macOS, and Linux) and architectures (x86, ARM, and whatnot).

Javascript has the potential to actually be a universal computing platform, not because of Javascript the language, but because of the importance of the web as a platform and all of the effort that has been put into optimizing Javascript runtimes as a result. Almost by accident, we have ended up with WASM, a mechanism for describing cross-platform native executables. That is ultimately better for everyone, because it abandons the idea that there is one programming language to rule them all, lets every language find its own niche, and lets other languages, including Python, coexist and cooperate with that ecosystem.

"And lets other languages, including Python, coexist and cooperate with that ecosystem" is along the same lines David Beazly ended his PyCon India keynote, after live coding a WASM interpreter in Python, which can execute a WASM binary compiled from Rust. All of this is super exciting!

After that, I decided to play with the minimal WASM examples Ezzeri has written. I'd already set up the Rust toolchain last week, so I just needed to install wasmtime, a runtime that lets you execute a WASM binary outside the browser.

$ curl https://wasmtime.dev/install.sh -sSf | bash
Installing latest version of Wasmtime (dev)
Checking for existing Wasmtime installation
Fetching archive for Linux, version dev
Creating directory layout
Extracting Wasmtime binaries
Editing user profile (/home/vinayak/.config/fish/config.fish)
Finished installation. Open a new terminal to start using Wasmtime!

There's already a Rust example in the repo which contains a function for adding two numbers.

fn add(x: i32, y: i32) -> i32 {
    x + y
}

fn main() {
    println!("{}", add(1, 2));
}

You can add the wasm32-wasi target, compile the above example to WASM, and execute the resulting binary using wasmtime. (Newer Rust toolchains have renamed this target to wasm32-wasip1.)

$ rustup target add wasm32-wasi
info: downloading component 'rust-std' for 'wasm32-wasi'
info: installing component 'rust-std' for 'wasm32-wasi'
info: Defaulting to 500.0 MiB unpack ram
$ rustc add.rs --target wasm32-wasi
$ ls
add.wasm

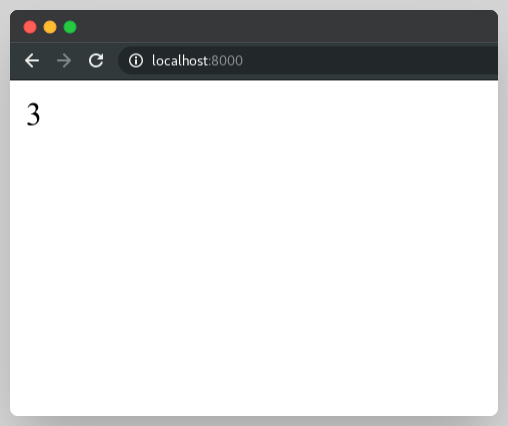

$ wasmtime add.wasm
3

In the second example, he defines the same function with `#[no_mangle]` and `pub extern`, which exports it under its plain name as part of the module's public interface.

#[no_mangle]
pub extern "C" fn add(x: i32, y: i32) -> i32 {
    x + y
}

Ezzeri also told me about the wasm32-unknown-unknown target for Rust, which makes no assumptions about the host operating system, so the resulting module can be compiled once and run by a WASM runtime on any platform or architecture!

$ rustup target add wasm32-unknown-unknown
info: downloading component 'rust-std' for 'wasm32-unknown-unknown'
info: installing component 'rust-std' for 'wasm32-unknown-unknown'
info: Defaulting to 500.0 MiB unpack ram
$ rustc add.rs --target=wasm32-unknown-unknown --crate-type=cdylib
$ ls
add.wasm

The resulting binary can be invoked from within Javascript using the code that Russell showed in his talk.

// `output` is assumed to be an element on the page, e.g. <p id="output"></p>.
fetch("./add.wasm")
  .then((response) => response.arrayBuffer())
  .then((bytes) => WebAssembly.instantiate(bytes))
  .then((mod) => {
    output.textContent = mod.instance.exports.add(1, 2);
  })
  .catch(console.error);

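To try this in the browser, the files have to be served over HTTP, since `fetch` won't load them from a `file://` URL. Python's built-in server does the job:

$ python -m http.server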
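Outside the browser, Node.js can load the same `add.wasm` straight from disk, with no HTTP server involved. This is a minimal sketch, assuming the module needs no imports (which I'd expect for a tiny wasm32-unknown-unknown build of `add`, but haven't checked for every toolchain version); `loadWasm` is a hypothetical helper name.

```javascript
// Load a .wasm file from disk in Node.js and return its exports.
// Assumption: the module has no imports, so an empty import object is enough.
const fs = require("fs");

function loadWasm(path) {
  const bytes = fs.readFileSync(path);              // a Buffer is a valid BufferSource
  const wasmModule = new WebAssembly.Module(bytes); // synchronous compile
  const instance = new WebAssembly.Instance(wasmModule, {}); // empty import object
  // Collect export names, e.g. to check that #[no_mangle] kept `add` unmangled.
  const exportNames = WebAssembly.Module.exports(wasmModule).map((e) => e.name);
  return { exports: instance.exports, exportNames };
}
```

With the build above, `loadWasm("./add.wasm").exports.add(1, 2)` should return `3`, and `exportNames` should include `"add"`, probably alongside a few linker-generated exports such as `memory`.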

Right now, Camelot, the PDF table extraction library I work on, uses Ghostscript to do a PDF-to-PNG conversion when someone uses the lattice flavor (the resulting image is then processed to find lines). The problem is that users need to install Ghostscript separately, and a lot of them run into installation problems.

One of my goals for the batch is to make Camelot self-contained by removing Ghostscript as a dependency. Maybe one way to do that would be to compile Ghostscript (or a small subset of it) to WASM using Emscripten, package the resulting binary with Camelot, and invoke it using wasmer. Or maybe rewrite the subset that does the PDF-to-PNG conversion in Rust, compile it to the wasm32-unknown-unknown target, and do the same "package and invoke". Or maybe go the Python extension route with the Rust subset, which would require building wheels for a combination of different platforms and architectures.

If you're reading this and have any pointers, let's talk!