Airflow, Meta Data Engineering, and a Data Platform for the World’s Largest Democracy

25 August 2018 · apache · airflow

I originally wrote this post for the SocialCops engineering blog, and then published it on Hacker Noon.

In our last post on Apache Airflow, we mentioned how it has taken the data engineering ecosystem by storm. We also talked about how we’ve been using it to move data across our internal systems and explained the steps we took to create an internal workflow. The ETL workflow (e)xtracted PDFs from a website, (t)ransformed them into CSVs and (l)oaded the CSVs into a store. We also touched briefly on the breadth of ETL use cases you can solve for, using the Airflow platform.

In this post, we will talk about how one of Airflow’s principles, being ‘Dynamic’, offers configuration-as-code as a powerful construct to automate workflow generation. We’ll also talk about how that helped us use Airflow to power DISHA, a national data platform where Indian MPs and MLAs monitor the progress of 42 national-level schemes. Finally, we will briefly discuss some of our reflections from the project on today’s public data technology.

Why Airflow?

To recap from the previous post, Airflow is a workflow management platform created by Maxime Beauchemin at Airbnb. We have been using Airflow to set up batch data workflows in production for more than a year, and over that time we have found the following points, some of which are also its core principles, to be very useful.

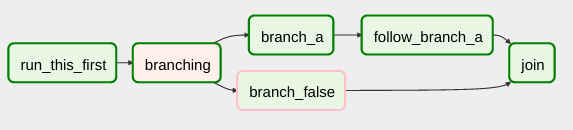

- Dynamic: A workflow can be defined as a Directed Acyclic Graph (DAG) in a Python file (the DAG file), making dynamic generation of complex workflows possible.

- Extensible: There are a lot of operators right out of the box! An operator is a building block for your workflow and each one performs a certain function. For example, the PythonOperator lets you define the logic that runs inside each of the tasks in your workflow, using Python!

- Scalable: The tasks in your workflow can be executed in parallel by multiple Celery workers, using the CeleryExecutor.

- Open Source: The project is under incubation at the Apache Software Foundation and is being actively maintained. It also has an active Gitter room.

Furthermore, Airflow comes with a web interface that gives you all the context you need about your workflow’s execution, from each task’s state (running, success, failed, etc.) to logs that the task generated!
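To make the ‘Dynamic’ principle above a little more concrete, here is a minimal sketch in plain Python. It is not Airflow’s API: it only illustrates the idea that, because a workflow is described in code, a loop over some configuration can generate the whole graph. The source names, the `build_workflow` helper, and the task naming scheme are all hypothetical, and the standard library’s `graphlib` (Python 3.9+) stands in for a scheduler that resolves task order.

```python
from graphlib import TopologicalSorter

# Hypothetical configuration: one entry per data source (illustrative names).
SOURCES = ["scheme_a", "scheme_b", "scheme_c"]


def build_workflow(sources):
    """Generate an extract -> transform -> load chain for each source.

    Returns a mapping of task name -> set of upstream task names,
    which is one simple way to represent a directed acyclic graph.
    """
    graph = {}
    for source in sources:
        extract = f"extract_{source}"
        transform = f"transform_{source}"
        load = f"load_{source}"
        graph[extract] = set()          # no upstream dependencies
        graph[transform] = {extract}    # runs after extract
        graph[load] = {transform}       # runs after transform
    return graph


workflow = build_workflow(SOURCES)

# Any valid execution order runs each task after all of its upstream tasks.
execution_order = list(TopologicalSorter(workflow).static_order())
```

Adding a fourth source to `SOURCES` adds three more tasks without touching the generation logic. In an actual DAG file, the same kind of loop would create operators and set their dependencies instead of filling in a dictionary.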

The problem with static code

Here at SocialCops, we’ve observed a recurring use case of extracting data from various systems using web services, as a component of our ETL workflows. One of the ways to go forward with this task is to write Python code, which can be used with the PythonOperator to integrate the data into a workflow. Let’s look at a very rudimentary DAG file that illustrates this.